99 Bottles... and Then Some

Previously I spent a great deal of time creating a basic API for storing and retrieving bottles in an alcohol collection, as well as locations associated with those bottles and their category. Before I ever started the Rust and Rocket series, I had actually started manually recording our liquor collection in a Google Sheet. I got through more than 140 bottles before I decided I wanted something a little more robust (and a bit less terrible to work with).

Once I had the working basics of my application, I wanted to get the data I had already created into my new database. Could I manually call the API 140+ times (plus more for adding locations, categories, and sub categories)? Well, yeah, but where is the fun in that? Instead, my goal is to create a script that parses a CSV exported from Google Sheets and calls the API for me in an automated way.

First, let's establish some requirements for my script:

- Should be able to run from the command line
- Should accept a `.csv` file as an argument
- Should parse the file into correct models:
  - Unique Categories
  - Unique Sub Categories (with their required Category connection)
  - Unique Locations (called Storage in our application)
  - Unique Bottles connected to their correct Categories, Sub Categories, and Storage
- Should create each of the above unique entities in our existing database
- Should account for existence of items
- Should NOT explode on partial failure (entities which are created should not rollback, rather check for existence of the item)
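To make the "should NOT explode on partial failure" requirement a little more concrete, here is a minimal sketch of one way it could look: try every item, and record failures instead of stopping at the first one. The `ImportReport` type, the `import_all` function, and the closure standing in for the API call are all hypothetical names for illustration, not part of Ethel or the final script.

```rust
/// Outcome of a bulk import: what was created and what failed.
#[derive(Debug, Default, PartialEq)]
struct ImportReport {
    created: Vec<String>,
    failed: Vec<(String, String)>, // (item name, error message)
}

/// Try to create every item, recording failures instead of aborting.
/// `create` is a stand-in for the real API call (hypothetical signature).
fn import_all<F>(names: &[&str], mut create: F) -> ImportReport
where
    F: FnMut(&str) -> Result<(), String>,
{
    let mut report = ImportReport::default();
    for &name in names {
        match create(name) {
            Ok(()) => report.created.push(name.to_string()),
            Err(err) => report.failed.push((name.to_string(), err)),
        }
    }
    report
}

fn main() {
    // Fake "API" that rejects one item, to show the run carries on afterwards.
    let report = import_all(&["Midori", "Aperol", "Cynar"], |name| {
        if name == "Aperol" {
            Err("simulated server error".to_string())
        } else {
            Ok(())
        }
    });
    println!("created: {:?}", report.created);
    println!("failed: {:?}", report.failed);
}
```

Anything that lands in `failed` could be retried on a second run, which is where checking for existing items comes in.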

I will probably think of more requirements, but this is enough for us to get started. First, I want to run this script from the command line. Choosing a language to write the script in is super open ended: almost anything can be run as an executable in a Unix/Linux environment. I decided to continue my foray into Rust. There is even a guide focused on CLI (Command Line Interface) applications in Rust for the uninitiated to learn quickly! The promise of 15 minutes to a runnable CLI was pretty interesting to me.

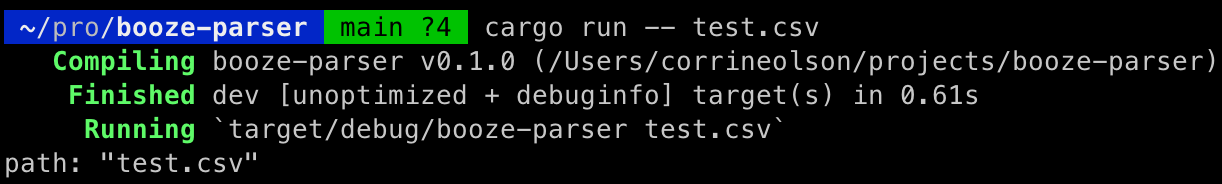

Alright, off we go! First things first, let's use cargo to create a new project by running `cargo new booze-parser`. Then we can `cd` into our newly created project directory and test our executable by running `cargo run`. This yields a "Hello, world!" output on our terminal. Next, let's get it to take in some command line arguments.

I decided to make the parsing of arguments easier by using the clap crate as mentioned in the CLI guide above. One note, however: if you are using a recent version (v4.4.17 in my case) and want to use derive on your struct for the arguments, you will need to run `cargo add clap --features derive`, not just `cargo add clap`. The derive feature is not enabled by default!

Now that I have my crate installed, I made this short addition to my main.rs to take in a file path and print it back out on the command line:

```rust
use clap::Parser;

#[derive(Parser)]
struct Cli {
    path: std::path::PathBuf,
}

fn main() {
    let args = Cli::parse();
    println!("path: {:?}", args.path);
}
```

Function in main.rs to take command line path and print back out.

Let's test it by running the following:

```
cargo run -- test.csv
```

I had to put this in a code block to avoid the two dashes becoming an em dash by default. Ha. Even inline code formatting was not enough to avoid the conversion.

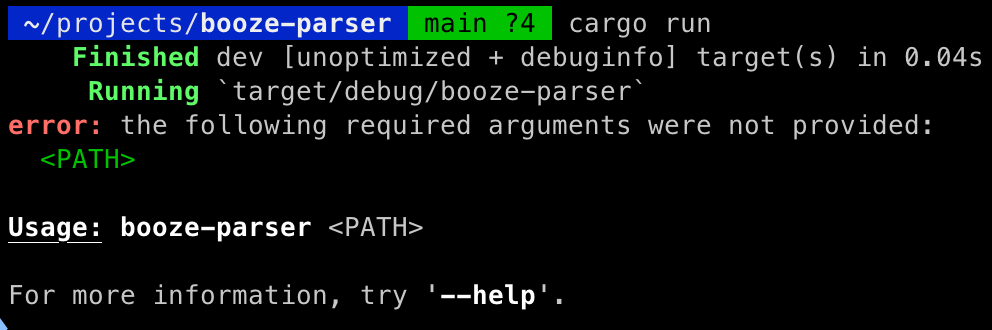

If we do not provide a path, our application throws a nice error due to using the clap crate:

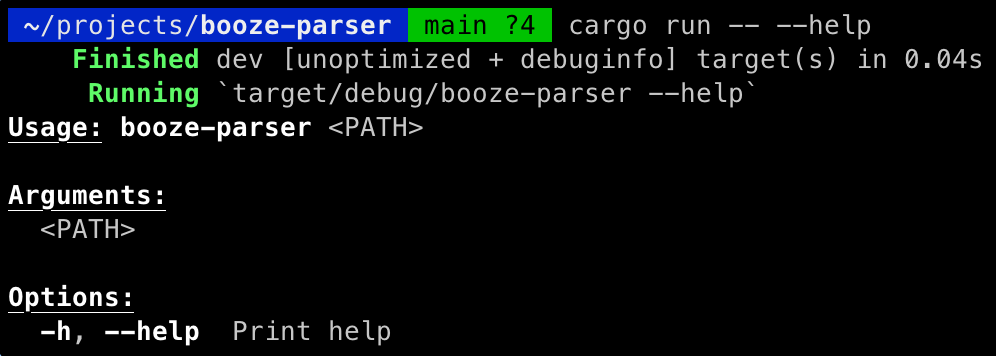

Notice we also get a prompt to use --help for more information on the application. This is another feature of the clap crate. Here is the output of passing --help to our app:

I really like how easy this was. Honestly, clap was probably overkill for our simple parser, but I could see it being super useful for a complex CLI application. Alright, great! We can take a file in. Next up: parsing a file!
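For comparison, here is a rough sketch of what the same single-argument handling might look like with only the standard library. The `parse_path` function and its error messages are my own illustration (they are not what clap prints), and this version gives up the generated `--help` output entirely.

```rust
use std::path::PathBuf;

/// Pull a single file path out of an argument list.
/// The first item is skipped because it is the program name.
fn parse_path<I>(args: I) -> Result<PathBuf, String>
where
    I: IntoIterator<Item = String>,
{
    let mut args = args.into_iter().skip(1);
    match (args.next(), args.next()) {
        (Some(path), None) => Ok(PathBuf::from(path)),
        (None, _) => Err("missing required argument: <PATH>".to_string()),
        (Some(_), Some(extra)) => Err(format!("unexpected argument: {extra}")),
    }
}

fn main() {
    match parse_path(std::env::args()) {
        Ok(path) => println!("path: {:?}", path),
        // A real CLI would probably also exit with a non-zero status here.
        Err(msg) => eprintln!("error: {msg}"),
    }
}
```

It is not much code, but every new flag or option would mean more hand-written matching, which is the part clap takes care of.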

I ended up making quite a few changes after looking at the csv crate for Rust. Its documentation is excellent! One of the major differences in our main.rs is pulling the business logic out of the main function. This lets the core logic return a Result, which gives us some real error handling (in addition to the super basic argument errors provided by the clap crate).

```rust
use clap::Parser;
use std::{error::Error, process};

#[derive(Parser)]
struct Cli {
    path: std::path::PathBuf,
}

fn boozeparse() -> Result<(), Box<dyn Error>> {
    let args = Cli::parse();
    let mut rdr = csv::Reader::from_path(args.path)?;
    for result in rdr.records() {
        // The iterator yields Result<StringRecord, Error>, so we check the
        // error here.
        let record = result?;
        println!("{:?}", record);
    }
    Ok(())
}

fn main() {
    if let Err(err) = boozeparse() {
        println!("error running booze-parser: {}", err);
        process::exit(1);
    }
}
```

main.rs after splitting core parsing logic out from main

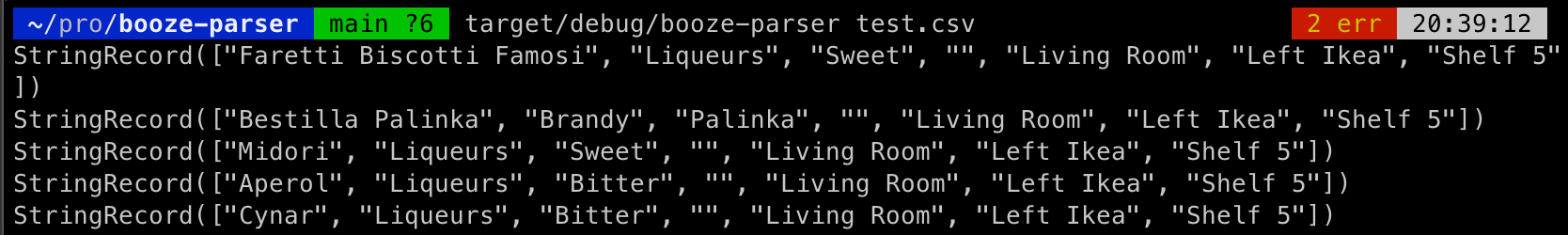

I created a short excerpt (5 lines) from my larger csv file to test with. I would expect my application to print each line of the file out. I am not currently sure what the default handling of the csv crate is for headers... let's find out! Notice I also ran the executable from the debug folder this time instead of passing arguments to cargo run.

It reads files! After further reading of the csv crate documentation, and looking at the output of the reader, it turns out the csv crate treats the first row as a header by default, and provides a method to access the headers whenever needed via `Reader::headers`.

Next step is parsing our data into the correct structs and making sure to de-duplicate before calling our API for Ethel. My current thought is to go through the records and build up collections of each type (Bottle, Category, Sub Category, and Storage). After each record, I would create the necessary values if they don't already exist. My plan is to keep the id returned by each create call in a collection (probably a map). That way, if I have already created a category, I have the id ready for the subcategory creation or bottle creation call. This also means I only have to go through the data once, rather than multiple times.
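Here is a small sketch of that id-caching idea, assuming a map keyed by name. `FakeApi` is a hypothetical stand-in that just hands out increasing ids (the real thing would make HTTP calls to Ethel), and `get_or_create` is a name I made up for illustration.

```rust
use std::collections::HashMap;

/// Stand-in for the Ethel API: hands out increasing ids and counts create calls.
/// (Hypothetical; a real client would make HTTP requests.)
#[derive(Default)]
struct FakeApi {
    next_id: u32,
    create_calls: u32,
}

impl FakeApi {
    fn create(&mut self, _name: &str) -> u32 {
        self.next_id += 1;
        self.create_calls += 1;
        self.next_id
    }
}

/// Return the cached id for `name`, creating the entity only on first sight.
fn get_or_create(cache: &mut HashMap<String, u32>, api: &mut FakeApi, name: &str) -> u32 {
    if let Some(&id) = cache.get(name) {
        return id;
    }
    let id = api.create(name);
    cache.insert(name.to_string(), id);
    id
}

fn main() {
    // A few (bottle, category) pairs from my test data.
    let rows = [
        ("Faretti Biscotti Famosi", "Liqueurs"),
        ("Bestilla Palinka", "Brandy"),
        ("Midori", "Liqueurs"),
    ];
    let mut api = FakeApi::default();
    let mut categories = HashMap::new();
    for (bottle, category) in rows {
        let category_id = get_or_create(&mut categories, &mut api, category);
        println!("{bottle} -> category id {category_id}");
    }
    println!("create calls made: {}", api.create_calls);
}
```

Sub categories would likely need a key that includes the parent category (a tuple of category id and name, for example), since the same sub category name could show up under more than one category.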

If this bulk creation operation were something I planned on doing regularly, implementing a batch create API in Ethel would be a good idea. I only plan on doing this once, however, to convert my existing spreadsheet data into my new database.

But first! Let's finish getting our data parsed out to start. In a real world example, I would make a client library for Ethel. This would include modules and a client for interacting with my API, and define the models for serialization and deserialization. Given I don't feel like it (yet), I would rather just copy paste my models.rs from our original Ethel project (look back on the structs we created for more of a refresh).

We don't need the Diesel decorators, as we are not storing anything on our booze-parser. Instead, we can remove most of the decorators, and only leave the serde ones for Deserialize and Serialize. We should also reconsider the headers for our csv for ease of use: we can create a struct for the records to deserialize our csv and make it easier to use. Let's add a new Record struct to our models file:

```rust
use serde::Deserialize;

// One row of the CSV; field names match the column headers.
#[derive(Debug, Deserialize)]
pub struct Record {
    pub bottle: String,
    pub category: String,
    pub sub_category1: String,
    pub sub_category2: String,
    pub room: String,
    pub storage: String,
    pub shelf: String,
}
```

excerpt from models.rs copied from Ethel and modified, Record struct

Additionally, let's update our test.csv to match:

```
bottle,category,sub_category1,sub_category2,room,storage,shelf
Faretti Biscotti Famosi,Liqueurs,Sweet,,Living Room,Left Ikea,Shelf 5
Bestilla Palinka,Brandy,Palinka,,Living Room,Left Ikea,Shelf 5
Midori,Liqueurs,Sweet,,Living Room,Left Ikea,Shelf 5
Aperol,Liqueurs,Bitter,,Living Room,Left Ikea,Shelf 5
Cynar,Liqueurs,Bitter,,Living Room,Left Ikea,Shelf 5
```

test.csv
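Notice that every sample row leaves `sub_category2` blank. As far as I know, the csv crate deserializes an empty field into an empty `String`, so a small helper for pulling out only the filled-in sub categories could come in handy later. This is just a sketch: the `sub_categories` method is hypothetical, and the struct below drops the serde derive so it compiles on its own.

```rust
/// Same fields as the Record struct in models.rs, minus the serde derive so
/// this sketch builds without extra crates.
#[allow(dead_code)] // not every field is used in this sketch
#[derive(Debug)]
struct Record {
    bottle: String,
    category: String,
    sub_category1: String,
    sub_category2: String,
    room: String,
    storage: String,
    shelf: String,
}

impl Record {
    /// Hypothetical helper: the sub category columns that actually contain text.
    fn sub_categories(&self) -> Vec<&str> {
        let mut found = Vec::new();
        for column in [&self.sub_category1, &self.sub_category2] {
            let value = column.trim();
            if !value.is_empty() {
                found.push(value);
            }
        }
        found
    }
}

fn main() {
    // First data row from test.csv: sub_category2 is blank.
    let record = Record {
        bottle: "Faretti Biscotti Famosi".to_string(),
        category: "Liqueurs".to_string(),
        sub_category1: "Sweet".to_string(),
        sub_category2: String::new(),
        room: "Living Room".to_string(),
        storage: "Left Ikea".to_string(),
        shelf: "Shelf 5".to_string(),
    };
    println!("{} / {:?}", record.category, record.sub_categories());
}
```

Another option would be declaring the field as `Option<String>`, which trades the emptiness check for a `None` check.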

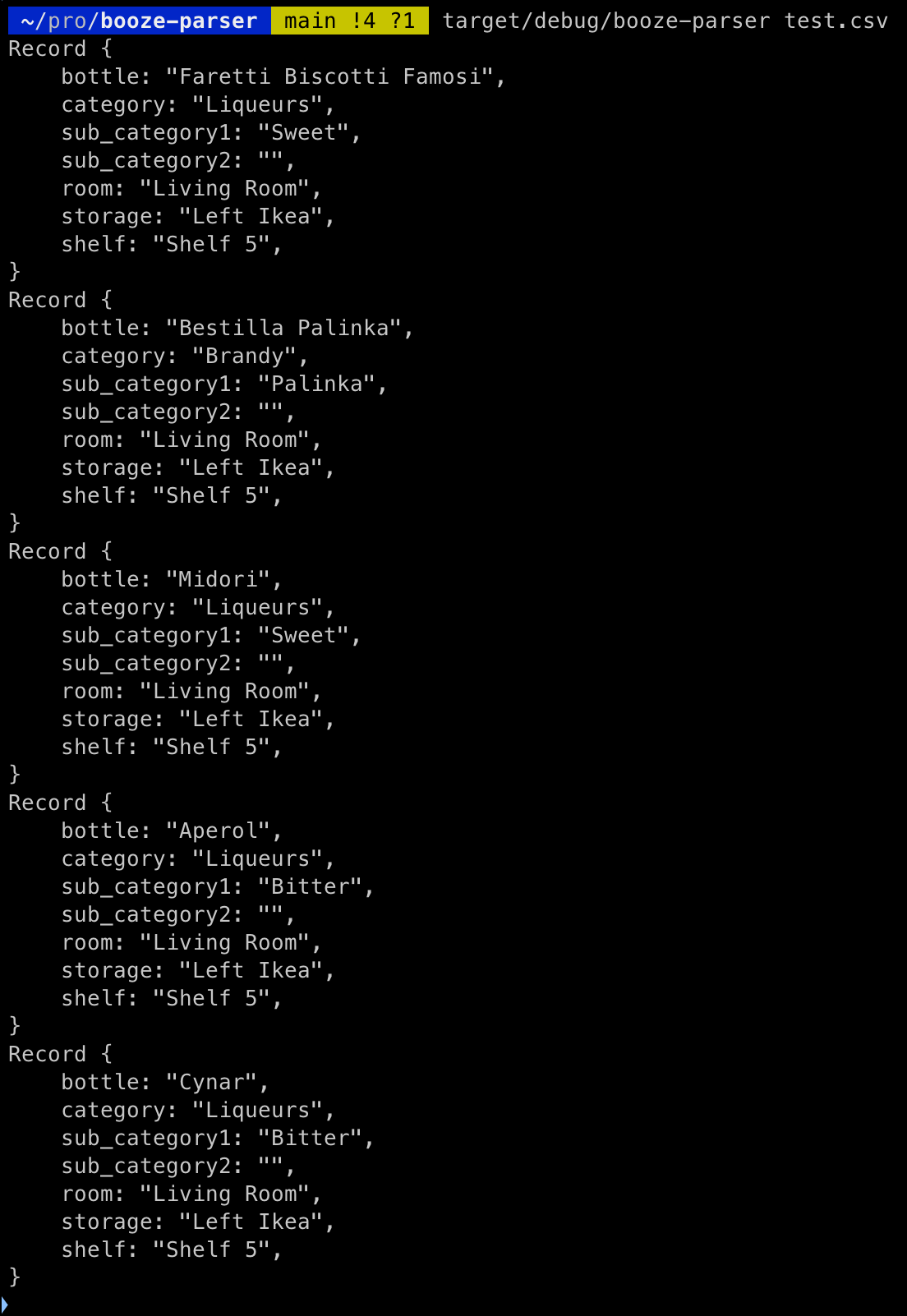

Great! Now let's update our csv parsing in our main.rs to use our new Record struct, and don't forget to add a reference to our models we copied over!

```rust
pub mod models;

use crate::models::Record;

fn boozeparse() -> Result<(), Box<dyn Error>> {
    let args = Cli::parse();
    let mut rdr = csv::Reader::from_path(args.path)?;
    for result in rdr.deserialize() {
        let record: Record = result?;
        println!("{:#?}", record);
    }
    Ok(())
}
```

excerpt of main.rs for boozeparse function

Alright, finally let's build and run our updated code to see if our data properly deserializes.

Now we have a nicely formatted set of data we can use to call our API locally and create data! Next time, we will start calling our API using some kind of client... will it be a high level HTTP client? Or will I just use curl? Find out next time, right now I want to finish this beer...

Buoying my Fun with Czech Dark Lager

I really enjoy beer from Buoy Beer Co! They are PNW local out of Astoria, Oregon. I drink a lot of their beers: their regular lager, their kolsch, their pilsner, all delicious! Throughout the year, they release some rotating series, including my current libation: their Czech Dark Lager.

Did I mention it comes in "Tall Buoy" cans? I love my beer by the pint! Sadly, this particular beer has disappeared from their website, which means I probably won't see it at my local store much longer. Alas, enjoy while I can!

True to the lager style, the beer is cold fermented then lagered for five weeks, leading to a clear, clarified brew. The lager is brewed with German malts: Weyermann Carafa, Munich, and Weyermann Floor Malted Pilsner.

The beer is very dark in the glass, with roasty toasty nutty flavor notes, a hint of sweetness, and a bitter (not unpleasant) finish. I like how cleanly this finishes vs. other dark beers I enjoy (looking at you stouts... sometimes you hang out too long... like the guy who won't leave the party even when the host wants to go to sleep... you know who you are).

I would probably give this beer a 3.75/5, super solid, if I see it I buy it, and I always enjoy it. I wouldn't go on a hunt for it specifically though. Try it while you can!